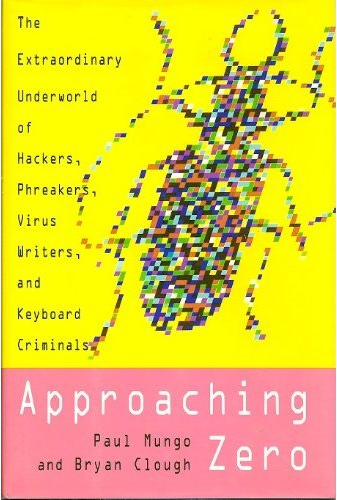

Paul Mungo, Bryan Clough

Random House

ISBN 0679409386

March 1993

Fry Guy watched the computer screen as the cursor blinked. Beside him a small electronic box chattered through a call routine, the numbers clicking audibly as each of the eleven digits of the phone number was dialed. Then the box made a shrill, electronic whistle, which meant that the call had gone through; Fry Guy's computer had been connected to another system hundreds of miles away.

The cursor blinked again, and the screen suddenly changed. WELCOME TO CREDIT SYSTEMS OF AMERICA, it read, and below that, the cursor pulsed beside the prompt: ENTER ACCOUNT NUMBER.

Fry Guy smiled. He had just broken into one of the most secure computer systems in the United States, one which held the credit histories of millions of American citizens. And it had really been relatively simple. Two hours ago he had called an electronics store in Elmwood, Indiana, which-like thousands of other shops across the country-relied on Credit Systems of America to check its customers' credit cards.

"Hi, this is Joe Boyle from CSA . . . Credit Systems of America," he had said, dropping his voice two octaves to sound older-a lot older, he hoped-than his fifteen years. He also modulated his natural midwestern drawl, giving his voice an eastern twang: more big-city, more urgent.

"I need to speak to your credit manager . . . uh, what's the name? Yeah, Tom. Can you put me through?"

Tom answered.

"Tom, this is Joe Boyle from CSA. You've been having some trouble with your account?"

Tom hadn't heard of any trouble.

"No? That's really odd…. Look, I've got this report that says you've been having problems. Maybe there's a mistake somewhere down the line. Better give me your account number again."

And Tom did, obligingly reeling off the eight-character code that allowed his company to access the CSA files and confirm customer credit references. As Fry Guy continued his charade, running through a phony checklist, Tom, ever helpful, also supplied his store's confidential CSA password. Then Fry Guy keyed in the information on his home computer. "I don't know what's going on," he finally told Tom. "I'll check around and call you back."

But of course he never would. Fry Guy had all the information he needed: the account number and the password. They were the keys that would unlock the CSA computer for him. And if Tom ever phoned CSA and asked for Joe Boyle, he would find that no one at the credit bureau had ever heard of him. Joe Boyle was simply a name that Fry Guy had made up.

Fry Guy had discovered that by sounding authoritative and demonstrating his knowledge of computer systems, most of the time people believed he was who he said he was. And they gave him the information he asked for, everything from account codes and passwords to unlisted phone numbers. That was how he got the number for CSA; he just called the local telephone company's operations section.

"Hi, this is Bob Johnson, Indiana Bell tech support," he had said. "Listen, I need you to pull a file for me. Can you bring it up on your screen?"

The woman on the other end of the phone sounded uncertain. Fry Guy forged ahead, coaxing her through the routine: "Right, on your keyboard, type in K-P pulse…. Got that? Okay, now do one-two-one start, no M-A…. Okay? Yeah? Can you read me the file? I need the number there…."

It was simply a matter of confidence-and knowing the jargon. The directions he had given her controlled access to unlisted numbers, and because he knew the routine, she had read him the CSA number, a number that is confidential, or at least not generally available to fifteen-year-old kids like himself.

But on the phone Fry Guy found that he could be anyone he wanted to be: a CSA employee or a telephone engineer-merely by pretending to be an expert. He had also taught himself to exploit the psychology of the person on the other end of the line. If they seemed confident, he would appeal to their magnanimity: "I wonder if you can help me . . ." If they appeared passive, or unsure, he would be demanding: "Look, I haven't got all day to wait around. I need that number now." And if they didn't give him what he wanted, he could always hang up and try again.

Of course, you had to know a lot about the phone system to convince an Indiana Bell employee that you were an engineer. But exploring the telecommunications networks was Fry Guy's hobby: he knew a lot about the phone system.

Now he would put this knowledge to good use. From his little home computer he had dialed up CSA, the call going from his computer to the electronic box beside it, snaking through a cable to his telephone, and then passing through the phone lines to the unlisted number, which happened to be in Delaware.

The electronic box converted Fry Guy's own computer commands to signals that could be transmitted over the phone, while in Delaware, the CSA's computer converted those pulses back into computer commands. In essence, Fry Guy's home computer was talking to its big brother across the continent, and Fry Guy would be able to make it do whatever he wanted.

But first he needed to get inside. He typed in the account number Tom had given him earlier, pressed Return, and typed in the password. There was a momentary pause, then the screen filled with the CSA logo, followed by the directory of services-the "menu."

Fry Guy was completely on his own now, although he had a good idea of what he was doing. He was going to delve deeply into the accounts section of the system, to the sector where CSA stored confidential information on individuals: their names, addresses, credit histories, bank loans, credit card numbers, and so on. But it was the credit card numbers that he really wanted. Fry Guy was short of cash, and like hundreds of other computer wizards, he had discovered how to pull off a high-tech robbery.

When Fry Guy was thirteen, in 1987, his parents had presented him with his first computer-a Commodore 64, one of the new, smaller machines designed for personal use. Fry Guy linked up the keyboard-sized system to an old television, which served as his video monitor.

On its own the Commodore didn't do much: it could play games or run short programs, but not a lot more. Even so, the machine fascinated him so much that he began to spend more and more time with it. Every day after school, he would hurry home to spend the rest of the evening and most of the night learning as much as possible about his new electronic plaything.

He didn't feel that he was missing out on anything. School bored him, and whenever he could get away with it, he skipped classes to spend more time working on the computer. He was a loner by nature; he had a lot of acquaintances at school, but no real friends, and while his peers were mostly into sports, he wasn't. He was tall and gawky and, at 140 pounds, not in the right shape to be much of an athlete. Instead he stayed at home.

About a year after he got the Commodore, he realized that he could link his computer to a larger world. With the aid of an electronic box, called a modem, and his own phone line, he could travel far beyond his home, school, and family.

He soon upgraded his system by selling off his unwanted possessions and bought a better computer, a color monitor, and various other external devices such as a printer and the electronic box that would give his computer access to the wider world. He installed three telephone lines: one linked to the computer for data transmission, one for voice, and one that could be used for either.

Eventually he stumbled across the access number to an electronic message center called Atlantic Alliance, which was run by computer hackers. It provided him with the basic information on hacking; the rest he learned from telecommunications manuals.

Often he would work on the computer for hours on end, sometimes sitting up all night hunched over the keyboard. His room was a sixties time warp filled with psychedelic posters, strobes, black lights, lava lamps, those gift-shop relics with blobs of wax floating in oil, and a collection of science fiction books. But his computer terminal transported him to a completely different world that encompassed the whole nation and girdled the globe. With the electronic box and a phone line he could cover enormous distances, jumping through an endless array of communications links and telephone exchanges, dropping down into other computer systems almost anywhere on earth. Occasionally he accessed Altos, a business computer in Munich, Germany, owned by a company that was tolerant of hackers. Inevitably, it became an international message center for computer freaks.

Hackers often use large systems like these to exchange information and have electronic chats with one another, but it is against hacker code to use one's real name. Instead, they use "handles," nicknames like The Tweaker, Doc Cypher and Knightmare. Fry Guy's handle came from a commercial for McDonald's that said "We are the fry guys."

Most of the other computer hackers he met were loners like he was, but some of them worked in gangs, such as the Legion of Doom, a U.S. group, or Chaos in Germany. Fry Guy didn't join a gang, because he preferred working in solitude. Besides, if he started blabbing to other hackers, he could get busted.

Fry Guy liked to explore the phone system. Phones were more than just a means to make a call: Indiana Bell led to an immense network of exchanges and connections, to phones, to other computers, and to an international array of interconnected phone systems and data transmission links. It was an electronic highway network that was unbelievably vast.

He learned how to dial into the nearest telephone exchange on his little Commodore and hack into the switch, the computer that controls all the phones in the area. He discovered that each phone is represented by a long code, the LEN (Line Equipment Number), which assigns functions and services to the phone, such as the chosen long-distance carrier, call forwarding, and so on. He knew how to manipulate the code to reroute calls, reassign numbers, and do dozens of other tricks, but best of all, he could manipulate the code so that all his calls would be free.

After a while Indiana Bell began to seem tame. It was a convenient launching pad, but technologically speaking it was a wasteland. So he moved on to BellSouth in Atlanta, which had all of the latest communications technology. There he became so familiar with the system that the other hackers recognized it as his SoI-sphere of influence-just as a New York hacker called Phiber Optik became the king of NYNEX (the New York-New England telephone system), and another hacker called Control C claimed the Michigan network. It didn't mean that BellSouth was his alone, only that the other members of the computer underworld identified him as its best hacker.

At the age of fifteen he started using chemicals as a way of staying awake. Working at his computer terminal up to twenty hours a day, sleeping only two or three hours a night, and sometimes not at all, he relied on the chemicals-uppers, speed-to keep him alert, punching away at his keyboard, exploring his new world.

But outside this private world, life was getting more confusing. Problems with school and family were beginning to accumulate, and out of pure frustration, he thought of a plan to make some money.

In 1989 Fry Guy gathered all of the elements for his first hack of CSA. He had spent two years exploring computer systems and the phone company, and each new trick he learned added one more layer to his knowledge. He had become familiar with important computer operating systems, and he knew how the phone company worked. Since his plan involved hacking into CSA and then the phone system, it was essential to be expert in both.

The hack of CSA took longer than he thought it would. The account number and password he extracted from Tom only got him through the credit bureau's front door. But the codes gave him legitimacy; to CSA he looked like any one of thousands of subscribers. Still, he needed to get into the sector that listed individuals and their accounts-he couldn't just type in a person's name, like a real CSA subscriber; he would have to go into the sector through the back door, as CSA itself would do when it needed to update its own files.

Fry Guy had spent countless hours doing just this sort of thing: every time he accessed a new computer, wherever it was, he had to learn his way around, to make the machine yield privileges ordinarily reserved for the company that owned it. He was proficient at following the menus to new sectors and breaking through the security barriers that were placed in his way. This system would yield like all the others.

It took most of the afternoon, but by the end of the day he chanced on an area restricted to CSA staff that led the way to the account sector. He scrolled through name after name, reading personal credit histories, looking for an Indiana resident with a valid credit card.

He settled on a Visa card belonging to a Michael B. from Indianapolis; he took down his full name, account and telephone number. Exiting from the account sector, he accessed the main menu again. Now he had a name: he typed in Michael B. for a standard credit check.

Michael B., Fry Guy was pleased to see, was a financially responsible individual with a solid credit line.

Next came the easy part. Disengaging from CSA, Fry Guy directed his attention to the phone company. Hacking into a local switch in Indianapolis, he located the line equipment number for Michael B. and rerouted his incoming calls to a phone booth in Paducah, Kentucky, about 250 miles from Elmwood. Then he manipulated the phone booth's setup to make it look like a residential number, and finally rerouted the calls to the phone box to one of the three numbers on his desk. That was a bit of extra security: if anything was ever traced, he wanted the authorities to think that the whole operation had been run from Paducah.

And that itself was a private joke. Fry Guy had picked Paducah precisely because it was not the sort of town that would be home to many hackers: technology in Paducah, he snickered, was still in the Stone Age.

Now he had to move quickly. He had rerouted all of Michael B.'s incoming calls to his own phone, but didn't want to have to deal with his personal messages. He called Western Union and instructed the company to wire $687 to its office in Paducah, to be picked up by-and here he gave the alias of a friend who happened to live there. The transfer would be charged to a certain Visa card belonging to Michael B.

Then he waited. A minute or so later Western Union called Michael B.'s number to confirm the transaction. But the call had been intercepted by the reprogrammed switch, rerouted to Paducah, and from there to a phone on Fry Guy's desk.

Fry Guy answered, his voice deeper and, he hoped, the sort that would belong to a man with a decent credit line. Yes, he was Michael B., and yes, he could confirm the transaction. But seconds later, he went back into the switches and quickly reprogrammed them. The pay phone in Paducah became a pay phone again, and Michael B., though he was unaware that anything had ever been amiss, could once again receive incoming calls. The whole transaction had taken less than ten minutes.

The next day, after his friend in Kentucky had picked up the $687, Fry Guy carried out a second successful transaction, this time worth $432. He would perform the trick again and again that summer, as often as he needed to buy more computer equipment and chemicals. He didn't steal huge amounts of money-indeed, the sums he took were almost insignificant, just enough for his own needs.

But Fry Guy is only one of many, just one of a legion of adolescent computer wizards worldwide, whose ability to crash through high-tech security systems, to circumvent access controls, and to penetrate files holding sensitive information, is endangering our computer-dependent societies. These technology-obsessed electronic renegades form a distinct subculture. Some steal-though most don't; some look for information; some just like to play with computer systems. Together they probably represent the future of our computer-dependent society.

Welcome to the computer underworld-a metaphysical place that exists only in the web of international data communications networks, peopled by electronics wizards who have made it their recreation center, meeting ground, and home. The members of the underworld are mostly adolescents like Fry Guy who prowl through computer systems looking for information, data, links to other webs, and credit card numbers. They are often extraordinarily clever, with an intuitive feel for electronics and telecommunications, and a shared antipathy for ordinary rules and regulations.

The electronics networks were designed to speed communications around the world, to link companies and research centers, and to transfer data from computer to computer. Because they must be accessible to a large number of users, they have been targeted by computer addicts like Fry Guy-sometimes for exploration, sometimes for theft.

Almost every computer system of note has been hacked: the Pentagon, NATO, NASA, universities, military and industrial research laboratories. The cost of the depredations attributed to computer fraud has been estimated at $4 billion each year in the United States alone. And an estimated 85 percent of computer crime is not even reported.

The computer underworld can also be vindictive. In the past five years the number of malicious programs-popularly known as viruses-has increased exponentially. Viruses usually serve no useful purpose: they simply cripple computer systems and destroy data. And yet the underworld that produces them continues to flourish. In a very short time it has become a major threat to the technology-dependent societies of the Western industrial world.

Computer viruses began to spread in 1987, though most of the early bugs were jokes with playful messages, or relatively harmless programs that caused computers to play tunes. They were essentially schoolboyish tricks. But eventually some of the jokes became malicious: later viruses could delete or modify information held on computers, simulate hardware faults, or even wipe data off machines completely.

The most publicized virus of all appeared in 1992. Its arrival was heralded by the FBI, by Britain's New Scotland Yard and by Japan's International Trade Ministry, all of which issued warnings about the bug's potential for damage. It had been programmed to wipe out all data on infected computers on March 6th-the anniversary of Michelangelo's birth. The virus became known, naturally, as Michelangelo.

It was thought that the bug may have infected as many as 5 million computers worldwide, and that data worth billions of dollars was at risk. This may have been true, but the warnings from police and government agencies, and the subsequent press coverage, caused most companies to take precautions. Computer systems were cleaned out; back-up copies of data were made; the cleverer (or perhaps lazier) users simply reprogrammed their machines so that their internal calendars jumped from March 5th to March 7th, missing the dreaded 6th completely. (It was a perfectly reasonable precaution: Michelangelo will normally only strike when the computer's own calendar registers March 6.) Still, Michelangelo hasn't been eradicated. There are certainly copies of the virus still at large, probably being passed on innocently from computer user to computer user. And of course March 6th still comes once a year.

The rise of the computer underworld to the point at which a single malicious program like Michelangelo can cause law enforcement agencies, government ministries, and corporations to take special precautions, when credit bureau information can be stolen and individuals' credit card accounts can be easily plundered, began thirty years ago. Its impetus, curiously enough, was a simple decision by Bell Telephone to replace its human operators with computers.

The culture of the technological underworld was formed in the early sixties, at a time when computers were vast pieces of complex machinery used only by big corporations and big government. It grew out of the social revolution that the term the sixties has come to represent, and it remains an antiestablishment, anarchic, and sometimes "New Age" technological movement organized against a background of music, drugs, and the remains of the counterculture.

The goal of the underground was to liberate technology from the controls of state and industry, a feat that was accomplished more by accident than by design. The process began not with computers but with a fad that later became known as phreaking-a play on the words freak, phone, and free. In the beginning phreaking was a simple pastime: its purpose was nothing more than the manipulation of the Bell Telephone system in the United States, where most phreakers lived, for free long-distance phone calls.

Most of the earliest phreakers happened to be blind children, in part because it was a natural hobby for unsighted lonely youngsters. Phreaking was something they could excel at: you didn't need sight to phreak, just hearing and a talent for electronics.

Phreaking exploited the holes in Bell's long-distance, direct-dial system. "Ma Bell" was the company the counterculture both loved and loathed: it allowed communication, but at a price. Thus, ripping off the phone company was liberating technology, and not really criminal.

Phreakers had been carrying on their activities for almost a decade, forming an underground community of electronic pirates long before the American public had heard about them. In October 1971 Esquire magazine heralded the phreaker craze in an article by Ron Rosenbaum entitled "The Secrets of the Little Blue Box," the first account of phreaking in a mass-circulation publication, and still the only article to trace its beginnings. It was also undoubtedly the principal popularizer of the movement. But of course Rosenbaum was only the messenger; the subculture existed before he wrote about it and would have continued to grow even if the article had never been published. Nonetheless, his piece had an extraordinary impact: until then most Americans had thought of the phone, if they thought of it at all, as an unattractive lump of metal and plastic that sat on a desk and could be used to make and receive calls. That it was also the gateway to an Alice-in-Wonderland world where the user controlled the phone company and not vice versa was a revelation. Rosenbaum himself acknowledges that the revelations contained in his story had far more impact than he had expected at the time.

The inspiration for the first generation of phreakers was said to be a man known as Mark Bernay (though that wasn't his real name). Bernay was identified in Rosenbaum's article as a sort of electronic Pied Piper who traveled up and down the West Coast of the United States, pasting stickers in phone booths, inviting everyone to share his discovery of the mysteries of "loop-around-pairs," a mechanism that allowed users to make toll-free calls.

Bernay himself found out about loop-around-pairs from a friendly telephone company engineer, who explained that within the millions of connections and interlinked local exchanges of what in those days made up the Bell network there were test numbers used by engineers to check connections between the exchanges. These numbers often occurred in consecutive pairs, say (213)-9001 and (213)-9002, and were wired together so that a caller to one number was automatically looped around to the other. Hence the name, loop-around-pairs. Bernay publicized the fact that if two people anywhere in the country dialed any set of consecutive test numbers, they could talk together for free. He introduced a whole generation of people to the idea that the phone company wasn't an impregnable fortress: Ma Bell had a very exploitable gap in its defenses that anyone could use, just by knowing the secret. Bernay, steeped in the ethos of the sixties, was a visionary motivated by altruism-as well as by the commonly held belief that the phone system had been magically created to be used by anyone who needed it. The seeds he planted grew, over the next years, into a full-blown social phenomenon.
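The loop-around mechanism can be pictured with a small Python sketch. It is only a toy model: the class, its methods, and the placeholder test numbers are hypothetical names chosen for illustration, not a description of Bell's actual equipment. Two test numbers are treated as wired together, and two callers can talk once each side of the pair is occupied.

```python
class LoopAroundPair:
    """Toy model: two consecutive test numbers wired to each other."""

    def __init__(self, number_a, number_b):
        self.numbers = (number_a, number_b)
        self.callers = {}  # test number -> caller currently holding it

    def dial(self, caller, number):
        """A caller dials one side of the pair and holds it."""
        if number not in self.numbers:
            raise ValueError("not one of the paired test numbers")
        if number in self.callers:
            raise RuntimeError("that side of the pair is already in use")
        self.callers[number] = caller

    def connected(self):
        """Both sides occupied means the two callers are bridged together."""
        return len(self.callers) == 2

    def parties(self):
        """Callers currently on the pair, in the order of the two numbers."""
        return [self.callers[n] for n in self.numbers if n in self.callers]


# Hypothetical placeholder numbers; real test numbers are not reproduced here.
pair = LoopAroundPair("TEST-9001", "TEST-9002")
pair.dial("caller in Seattle", "TEST-9001")
waiting = pair.connected()   # only one side is occupied so far
pair.dial("caller in Los Angeles", "TEST-9002")
talking = pair.connected()   # both sides occupied: the callers can talk
print(waiting, talking, pair.parties())
```

The point of the model is that neither caller dials the other: each dials a test number, and the wiring between the two numbers does the rest.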

Legend has it that one of the early users of Bernay's system was a young man in Seattle, who told a blind friend about it, who in turn brought the idea to a winter camp for blind kids in Los Angeles. They dispersed back to their own hometowns and told their friends, who spread the secret so rapidly that within a year blind children throughout the country were linked together by the electronic strands of the Bell system. They had created a sort of community, an electronic clubhouse, and the web they spun across the country had a single purpose: communication. The early phreakers simply wanted to talk to each other without running up huge long-distance bills.

It wasn't long, though, before the means displaced the end, and some of the early phreakers found that the technology of the phone system could provide a lot more fun than could be had by merely calling someone. In a few years phreakers would learn other skills and begin to look deeper. They found a labyrinth of electronic passages and hidden sections within the Bell network and began charting it. Then they realized they were really looking at the inside of a computer, that the Bell system was simply a giant network of terminals-known as telephones-with a vast series of switches, wires, and loops stretching all across the country. It was an actual place, though it only existed at the end of a phone receiver, a nearly limitless electronic universe accessible by dialing numbers on a phone. And what made this space open to phreakers was the spread of electronic gadgets that would completely overwhelm the Bell system.

According to Bell Telephone, the first known instance of theft of long-distance telephone service by an electronic device was discovered in 1961, after a local office manager in the company's Pacific Northwest division noticed some inordinately lengthy calls to an out-of-area directory-information number. The calls were from a studio at Washington State College, and when Bell's engineers went to investigate, they found what they described as "a strange-looking device on a blue metal chassis" attached to the phone, which they immediately nicknamed a "blue box."

The color of the device was incidental, but the name stuck. Its purpose was to enable users to make free long-distance calls, and it was a huge advancement on simple loop-around-pairs: not only could the blue box set up calls to any number anywhere, it would also allow the user to roam through areas of the Bell system that were off-limits to ordinary subscribers.

The blue box was a direct result of Bell's decision in the mid-1950s to build its new direct-dial system around multifrequency tones-musical notes generated by dialing that instruct the local exchange to route the call to a specific number. The tones weren't the same as the notes heard when pressing the numbers on a push-button phone: they were based on twelve electronically generated combinations of six master tones. These tones controlled the whole system: hence they were secret.
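The arithmetic behind "combinations of six master tones" can be sketched in Python. The six frequencies below are an assumption taken from commonly documented descriptions of multifrequency signaling, not from the text, and the function name and timing values are hypothetical. The sketch counts the possible two-tone pairs and synthesizes one pair as raw samples.

```python
import math
from itertools import combinations

# Assumed master tones in Hz (commonly documented values; not given in the text).
MASTER_TONES_HZ = (700, 900, 1100, 1300, 1500, 1700)

# Each signal is two distinct master tones sounded together.
PAIRS = list(combinations(MASTER_TONES_HZ, 2))


def two_tone_samples(pair, duration_ms=60, sample_rate=8000):
    """Return float samples in [-1, 1] for two tones sounded together."""
    f1, f2 = pair
    n = duration_ms * sample_rate // 1000
    return [
        0.5 * math.sin(2 * math.pi * f1 * t / sample_rate)
        + 0.5 * math.sin(2 * math.pi * f2 * t / sample_rate)
        for t in range(n)
    ]


samples = two_tone_samples(PAIRS[0])
print(len(PAIRS))    # number of distinct pairs available from six tones
print(len(samples))  # number of samples in one 60 ms burst at 8 kHz
```

Six tones taken two at a time give fifteen possible pairs, which leaves room for the twelve combinations the text mentions.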

Or almost. In 1954 an article entitled "In-Band Single-Frequency Signaling" appeared in the Bell System Technical Journal, which described the electronic signals used for routing long-distance calls around the country, for "call completion" (hanging up), and for billing. The phone company then released the rest of its secrets when the November 1960 issue of the same journal described the frequencies of the tones used to dial the numbers.

The journal was intended only for Bell's own technical staff, but the company had apparently forgotten that most engineering colleges subscribed to it as well. The articles proved to be the combination to Bell's safe. Belatedly realizing its error, Bell tried to recall the two issues. But they had already become collectors' items, endlessly photocopied and passed around among engineering students all over the country.

Once Bell's tone system was known, it was relatively simple for engineering students to reproduce the tones, and then-by knowing the signaling methods-to employ them to get around the billing system. The early blue boxes used vacuum tubes (the forerunners of transistors) and were just slightly larger than the telephones they were connected to. They were really nothing more than a device that reproduced Bell's multifrequency tones, and for that reason hard-core phreakers called them MF-ers-for multifrequency transmitters. (The acronym was also understood to stand for "motherfuckers," because they were used to fuck around with Ma Bell.)

Engineering students have always been notorious for attempting to rip off the phone company. In the late 1950s Bell was making strenuous efforts to stamp out a device that much later was nicknamed the red box-presumably to distinguish it from the blue box. The red box was a primitive gizmo, often no more than an army-surplus field telephone or a modified standard phone linked to an operating Bell set. Legend has it that engineering students would wire up a red box for Mom and Dad before they left for college so that they could call home for free. Technically very simple, red boxes employed a switch that would send a signal to the local telephone office to indicate that the phone had been picked up. But the signal was momentary, just long enough to alert the local office and cause the ringing to stop, but not long enough to send the signal to the telephone office in the city where the call was originated. That was the trick: the billing was set up in the originating office, and to the originating office it would seem as though the phone was still ringing. When Pop took his finger off the switch on the box, he and Junior could talk free of charge.

The red boxes had one serious drawback: the phone company could become suspicious if it found that Junior had ostensibly spent a half an hour listening to the phone ring back at the family homestead. A more obvious problem was that Mom and Pop-if one believes the legend that red boxes were used by college kids to call home-would quickly tire of their role in ripping off the phone company only to make it easier for Junior to call and ask for more money.

Inevitably there were other boxes, too, all exploiting other holes in the Bell system. A later variation of the red box, sometimes called a black box, was popular with bookies. It caused the ringing to cease prior to the phone being picked up, thereby preventing the originating office from billing the call. There was also another sort of red box that imitated the sound of coins being dropped into the slot on pay phones. It was used to convince operators that a call was being paid for.

The blue box, however, was the most sophisticated of all. It put users directly in control of long-distance switching equipment. To avoid toll-call charges, users of blue boxes would dial free numbers-out-of-area directory enquiries or commercial 1-800 numbers-then reroute the call by using the tones in the MF-er.

This is how it worked: long-distance calls are first routed through a subscriber's own local telephone office. The first digits tell the office that the call is long-distance, and it is switched to an idle long-distance line. An idle line emits a constant 2600-cycle whistling tone, the signal that it is ready to receive a call. As the caller finishes dialing the desired number-called the address digits-the call is completed-all of which takes place in the time it takes to punch in the number.

At the local office, billing begins when the long-distance call is answered and ends when the caller puts his receiver down. The act of hanging up is the signal to the local office that the call is completed. The local office then tells the line that it can process any other call by sending it the same 2600-cycle tone, and the line begins emitting the tone again.

A phreaker made his free call by first accessing, say, the 1-800 number for Holiday Inn. His local office noted that it was processing a long-distance call, found an idly whistling line, and marked the call down as routed to a free number. At that point, before Holiday Inn answered, the phreaker pressed a button on his MF-er, which reproduced Bell's 2600-cycle whistle. This signaled to the long-distance line that the Holiday Inn call had been completed-or that the caller had hung up prior to getting an answer-and the line stood by to accept another call.

But at the local office no hanging-up signal had been received; hence the local office presumed the Holiday Inn call was still going through. The phreaker, still connected to a patiently whistling long-distance line, then punched in the address digits of any number he wanted to be connected to, while his local office assumed that he was really making a free call.
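The mismatch described above, in which the local office and the long-distance line hold different ideas about the same call, can be sketched as a toy simulation. This is only an illustrative model under stated assumptions: the class names `LocalOffice` and `Trunk`, the function `blue_box_call`, and the 555 phone numbers are all hypothetical, and the real switching equipment was electromechanical rather than software.

```python
from dataclasses import dataclass


@dataclass
class Trunk:
    """Toy model of a long-distance line (hypothetical, for illustration)."""
    idle: bool = True
    routed_to: str = ""

    def receive_digits(self, number: str) -> None:
        # Address digits arriving on an idle line set up a call.
        self.idle = False
        self.routed_to = number

    def hear_2600(self) -> None:
        # A 2600-cycle tone on the line is read as "call finished";
        # the line cannot tell who sent the tone.
        self.idle = True
        self.routed_to = ""


@dataclass
class LocalOffice:
    """Toy model of the subscriber's local office, where billing starts."""
    call_in_progress: bool = False
    recorded_number: str = ""

    def place_call(self, number: str, trunk: Trunk) -> None:
        self.call_in_progress = True
        self.recorded_number = number
        trunk.receive_digits(number)

    def subscriber_hangs_up(self, trunk: Trunk) -> None:
        # The normal way a call ends: the office itself sends the tone.
        self.call_in_progress = False
        trunk.hear_2600()


def blue_box_call(toll_free: str, real_target: str):
    """Replay the steps in the text; returns the final state of both parties."""
    office, trunk = LocalOffice(), Trunk()
    office.place_call(toll_free, trunk)  # office records a free call
    trunk.hear_2600()                    # tone comes from the caller, not the office
    trunk.receive_digits(real_target)    # caller sends new address digits
    return office, trunk


if __name__ == "__main__":
    office, trunk = blue_box_call("1-800-555-0100", "1-212-555-0199")
    print("office thinks the call goes to:", office.recorded_number)
    print("line is actually routed to:", trunk.routed_to)
```

The point of the sketch is that `LocalOffice.subscriber_hangs_up` is never called, so the office's record still shows the toll-free number while the line has been rerouted.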

Blue boxes could also link into forbidden areas in the Bell system. Users of MF-ers soon discovered that having a merrily whistling trunk line at their disposal could open many more possibilities than just free phone calls: they could dial into phone-company test switches, to long-distance route operators, and into conference lines-which meant they could set up their own phreaker conference calls. Quite simply, possession of a blue box gave the user the same control and access as a Bell operator. When operator-controlled dialing to Europe was introduced in 1963, phreakers with MF-ers found they could direct-dial across the Atlantic, something ordinary subscribers couldn't do until 1970.

The only real flaw with blue boxes was that Bell Telephone's accounts department might become suspicious of subscribers who seemed to spend a lot of time connected to the 1-800 numbers of, say, Holiday Inn or the army recruiting office and might begin monitoring the line. Phreaking, after all, was technically theft of service, and phreakers could be prosecuted under various state and federal laws.

To get around this, canny phreakers began to use public phone booths, preferably isolated ones. The phone company could hardly monitor every public telephone in the United States, and even when the accounts department realized that a particular pay phone had been used suspiciously, the phreaker would have long since disappeared.

By the late 1960s blue boxes had become smaller and more portable. The bulky vacuum tubes mounted on a metal chassis had been replaced by transistors in slim boxes only as large as their keypads. Some were built to look like cigarette packs or transistor radios. Cleverer ones-probably used by drug dealers or bookies-were actually working transistor radios that concealed the components of an operational blue box within their wiring.

What made Bell's technology particularly vulnerable was that almost anything musical could be used to reproduce the tone frequencies. Musical instruments such as flutes, horns, or organs could be made to re-create Bell's notes, which could then be taped, and a simple cassette player could serve as a primitive MF device. One of the easiest ways to make a free call was to record the tones for a desired number in the correct sequence onto a cassette tape, go to a phone, and play the tape back into the mouthpiece. To Bell's exasperation, some people could even make free phone calls just by whistling.

Joe Engressia, the original whistling phreaker, was blind, and was said to have been born with perfect pitch. As a child he became fascinated by phones: he liked to dial nonworking numbers around the country just to listen to the recording say, "This number is not in service." When he was eight, he was accidentally introduced to the theory of multifrequency tones, though he didn't realize it at the time. While listening to an out-of-service tape in Los Angeles, he began whistling and the phone went dead. He tried it again, and the same thing happened. Then he phoned his local office and reportedly said, "I'm Joe. I'm eight years old and I want to know why when I whistle this tune, the line clicks off."

The engineer told Joe about what was sometimes known as talk-off, a phenomenon that happened occasionally when one party to a conversation began whistling and accidentally hit a 2600-cycle tone. That could make the line think that the caller had hung up, and cause it to go dead. Joe didn't understand the explanation then, but within a few years he would probably know more about it than the engineer.
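Talk-off can be illustrated with a small tone detector. The sketch below uses the Goertzel algorithm, a standard digital technique for measuring the energy at one frequency, to decide whether a snippet of audio would look like the 2600-cycle signal. It is an assumption-laden illustration: Bell's detectors of the period were analog circuits, and the function names, the 8000-samples-per-second rate, and the 0.25 threshold are all hypothetical choices made for this example.

```python
import math


def goertzel_power(samples, sample_rate, target_hz):
    """Squared magnitude of the frequency bin nearest target_hz (Goertzel)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def tone(freq_hz, n_samples=400, sample_rate=8000):
    """A pure sine wave, standing in for a whistle or a speaking voice."""
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def sounds_like_hangup(samples, sample_rate=8000, threshold=0.25):
    """True if a large share of the signal's energy sits at 2600 cycles.

    The threshold is an arbitrary illustrative value, not a Bell figure.
    """
    energy = sum(x * x for x in samples)
    if energy == 0:
        return False
    n = len(samples)
    # Normalised so that a pure on-frequency sine scores about 1.0.
    share = goertzel_power(samples, sample_rate, 2600) / (energy * n / 2)
    return share > threshold


if __name__ == "__main__":
    print("2600-cycle whistle:", sounds_like_hangup(tone(2600)))
    print("1000-cycle hum:", sounds_like_hangup(tone(1000)))
```

A detector of this kind has no way of knowing whether the tone came from the switching equipment or from a caller's lips, which is the weakness the whistlers exploited.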

Joe became famous in 1971 when Ron Rosenbaum catalogued his phreaking skills in the Esquire article. But he had first come to public attention two years earlier, when he was discovered whistling into a pay phone at the University of South Florida. Joe, by this time a twenty-year-old university student, had mastered the science of multifrequency tones and, with perfect pitch, could simply whistle the 2600-cycle note down the line, and then whistle up any phone number he wanted to call. The local telephone company, determined to stamp out phreaking, had publicized the case, and Joe's college had disciplined him. Later, realizing that he was too well known to the authorities to continue phreaking in Florida, he moved on to Memphis, which was where Rosenbaum found him.

In 1970 Joe was living in a small room surrounded by the paraphernalia of phreaking. Even more than phreaking, however, Joe's real obsession was the phone system itself. His ambition, he told Rosenbaum, was to work for Ma Bell. He was in love with the phone system, and his hobby, he claimed, was something he called phone tripping: he liked to visit telephone switching stations and quiz the company engineers about the workings of the system. Often he knew more than they did. Being blind, he couldn't see anything, but he would run his hands down the masses of wiring coiled around the banks of circuitry. He could learn how the links were made just by feeling his way through the connections in the wiring, and in this way, probably gained more knowledge than most sighted visitors.

Joe had moved to Tennessee because that state had some interesting independent phone districts. Like many phreakers, Joe was fascinated by the independents-small, private phone companies not controlled by Bell-because of their idiosyncrasies. Though all of the independents were linked to Bell as part of the larger North American phone network, they often used different equipment (some of it older), or had oddities within the system that phreakers liked to explore.

By that time the really topflight phreakers were more interested in exploring than making free calls. They had discovered that the system, with all of its links, connections, and switches, was like a giant electronic playground, with tunnels from one section to another, pathways that could take calls from North America to Europe and back again, and links that could reach satellites capable of beaming calls anywhere in the world.

One of the early celebrated figures was a New York-based phreaker who used his blue box to call his girlfriend in Boston on weekends-but never directly. First he would call a 1-800 number somewhere in the country, skip out of it onto the international operator's circuit, and surface in Rome, where he would redirect the call to an operator in Hamburg. The Hamburg operator would assume the call originated in Rome and accept the instructions to patch it to Boston. Then the phreaker would speak to his girlfriend, his voice bouncing across the Atlantic to a switch in Rome, up to Hamburg, and then back to Boston via satellite. The delay would have made conversation difficult, but, of course, conversation was never the point.

Few phreakers ever reached that level of expertise. The community was never huge-there were probably never more than a few hundred real, diehard phone phreaks-but it suddenly began to grow in the late 1960s as the techniques became more widely known. Part of the impetus for this growth was the increased access to conference lines, which allowed skills and lore to be more widely disseminated.

Conference lines-or conference bridges-are simply special switches that allow several callers to participate in a conversation at the same time. The service, in those days, was generally promoted to businesses, but bridges were also used by the telephone company for testing and training. For instance, the 1121 conference lines were used to train Bell operators: they could dial the number to hear a recording of calls being made from a pay phone, including the pings of the coins as they dropped, so that they could become familiar with the system. If two phreakers rang any one of the 1121 training numbers, they could converse, though the constant pinging as the coins dropped on the recording was distracting.

Far better were lines like 2111, the internal company code for Telex testing. For six months in the late 1960s phreakers congregated on a disused 2111 test line located somewhere in a telephone office in Vancouver. It became an enormous clubhouse, attracting both neophyte and experienced MF-ers in a continuing conference call. To participate, phreakers needed only to MF their way through a 1-800 number onto the Vancouver exchange and then punch out 2111.

The clubhouse may have existed only in the electronic ether around a test number in a switching office somewhere in Canada, but it was a meeting place nonetheless.

Joe Engressia's life in Memphis revolved around phreaker conference lines, but when Rosenbaum talked to him, he was getting worried about being discovered.

"I want to work for Ma Bell. I don't hate Ma Bell the way some phone phreaks do. I don't want to screw Ma Bell. With me it's the pleasure of pure knowledge.

"There's something beautiful about the system when you know it intimately the way I do. But I don't know how much they know about me here. I have a very intuitive feel for the condition of the line I'm on, and I think they're monitoring me off and on lately, but I haven't been doing much illegal…. Once I took an acid trip and was having these auditory hallucinations . . . and all of a sudden I had to phone phreak out of there. For some reason I had to call Kansas City, but that's all."

Joe's intuition was correct: he was indeed being monitored. Shortly after that interview, agents from the phone company's security department, accompanied by local police, broke into his room and confiscated every bit of telecommunications equipment. Joe was arrested and spent the night in jail.

The charges against him were eventually reduced from possession of a blue box and theft of service to malicious mischief. His jail sentence was suspended. But in return he had to promise never to phreak again-and to make sure he kept his promise, the local phone company refused to restore his telephone line.2

One of Joe's friends at that time was a man called John Draper, better known as Captain Crunch. Like Joe, Draper was interested in the system: he liked to play on it, to chart out the links and connections between phone switching offices, overseas lines, and satellites. His alias came from the Quaker Oats breakfast cereal Cap'n Crunch, which once, in the late 1960s, had included a tiny plastic whistle in each box as a children's toy. Unknown to the company, it could be used to phreak calls.

The potential of the little whistle was said to have been discovered by accident. The toy was tuned to a high-A note that closely reproduced the 2600-cycle tone used by Bell in its long-distance lines. Kids demonstrating their new toy over the phone to Granny in another city would sometimes find that the phone went dead, which caused Bell to spend a perplexing few weeks looking for the source of the problem.

Draper first became involved with phreaking in 1969, when he was twenty-six and living in San Jose. One day he received what he later described as a "very strange call" from a man who introduced himself as Denny and said he wanted to show him something to do with musical notes and phones. Intrigued, Draper visited Denny, who demonstrated how tones played on a Hammond organ could be recorded and sent down the line to produce free long-distance calls. The problem was that a recording had to be made for each number required, unless Draper, who was an electronics engineer, could build a device that could combine the abilities of the organ and the recorder. The man explained that such a device would be very useful to a certain group of blind kids, and he wanted to know if Draper could help.

After the meeting Draper went home and immediately wired up a primitive multifrequency transmitter-a blue box. The device was about the size of a telephone. Ironically it wouldn't work in San Jose (where long-distance calls were still routed through an operator), so Draper had to drive back to San Francisco to demonstrate it. To his surprise it worked perfectly. "Stay low," he told the youngsters. "This thing's illegal."

But the blind kids were already into phreaking in a big way. They had already discovered the potential of the Cap'n Crunch plastic whistle, and had even found that to make it hit the 2600-cycle tone every time, all it needed was a drop of glue on the outlet hole.

Draper began to supply blue boxes to clients-generally unsighted youngsters-in the Bay Area and beyond. He was also fascinated by the little whistle, and early the next year, when he took a month's vacation in England, he took one with him. When a friend rang him from the States, Draper blew the little toy into the phone, sending the 2600-cycle "on-hook" (hanging-up) signal to the caller's local office in America. The "on-hook" tone signified that the call had been terminated, and the U.S. office-where billing was originated-stopped racking up toll charges. But because the British phone system didn't respond to the 2600-cycle whistle, the connection was maintained, and Draper could continue the conversation for free. He only used the whistle once in England, but the incident became part of phreaker legend and gave Draper his alias.

While in Britain, Draper received a stream of transatlantic calls from his blind friends. One of them, who lived in New York, had discovered that Bell engineers had a special code to dial England to check the new international direct-dial system, which was just coming on-line. The access code was 182, followed by a number in Britain. All of the calls placed in this way were free. The discovery spread rapidly among the phreaker community; everyone wanted to try direct-dialing to England, but since no one knew anyone there, Draper was the recipient of most of the calls.

This was, of course, long before the days when people would routinely make international calls. Even in America, where the phone culture was at its most developed, no one would casually pick up a telephone and call a friend halfway across the world. An international call, particularly a transatlantic call, was an event, and if families had relatives abroad, they would probably phone them only once a year, usually at Christmas. The call could easily take half a day to get through, the whole family would take turns talking, and everyone would shout-in those days, perhaps in awe of the great distance their voices were being carried, international callers always shouted. It would take another two decades before transatlantic calls became as commonplace as ringing across the country.

Naturally the British GPO (General Post Office), who ran the U.K. telephone system in those days, became somewhat suspicious of a vacationer who routinely received five or six calls a day from the United States. They began monitoring Draper's line; then investigators were sent to interview him. They wanted to know why he had been receiving so many calls from across the Atlantic. He replied that he was on holiday and that he supposed he was popular, but the investigators were unimpressed. Draper immediately contacted his friends in America and said, "No more."

At about this time, Draper had become the king of phreakers. He had rigged up a VW van with a switchboard and a high-tech MF-er and roamed the highways in California looking for isolated telephone booths. He would often spend hours at these telephones, sending calls around the world, bouncing them off communications satellites, leapfrogging them from the West Coast to London to Moscow to Sydney and then back again.

The Captain also liked to stack up tandems, which are the instruments that send the whistling tone from one switching office to another. What the Captain would do is shoot from one tandem right across the country to another, then back again to a third tandem, stacking them up as he went back and forth, once reportedly shooting across America twenty times. Then he might bounce the call over to a more exotic place, such as a phone box in London's Victoria Station, or to the American embassy in Moscow. He didn't have anything to say to the startled commuter who happened to pick up the phone at Victoria, or to the receptionist at the embassy in Moscow-that wasn't the point. Sometimes he simply asked about the weather.

The unit he carried in the back of the van was computer operated, and Draper was proud of the fact that it was more powerful and faster than the phone company's own equipment. It could, he claimed, "do extraordinary things," and the vagueness of the statement only added to the mystique.

Once, making a call around the world, he sent a call to Tokyo, which connected him to India, then Greece, then South Africa, South America, and London, which put him through to New York, which connected him to an L.A. operator-who dialed the number of the phone booth next to the one he was using. He had to shout to hear himself but, he claimed, the echo was "far out." Another time, using two phone booths located side by side, Draper sent his voice one way around the world from one of the telephones to the other, and simultaneously from the second phone booth he placed a call via satellite in the other direction back to the first phone. The trick had absolutely no practical value, but the Captain was much more interested in the mechanics of telecommunications than in actually calling anyone. "I'm learning about a system," he once said. "The phone company is a system, a computer is a system. Computers and systems-that's my bag."

But by this time the Captain was only stating the obvious. To advanced phreakers the system linking the millions of phones around the world-that spider's web of lines, loops, and tandems-was infinitely more interesting than anything they would ever hope to see. Most of the phreakers were technology junkies anyway, the sort of kids who took apart radios to see how they worked, who played with electronics when they were older, and who naturally progressed to exploring the phone system, if only because it was the biggest and best piece of technology they could lay their hands on. And the growing awareness that they were liberating computer technology from Ma Bell made their hobby even more exciting.

In time even Mark Bernay, who had helped spread phone phreaking across America, found that his interests were changing. By 1969, he had settled in the Pacific Northwest and was working as a computer programmer in a company with access to a large time-share mainframe-a central computer accessed by telephone that was shared among hundreds of smaller companies. Following normal practice, each user had his own log-in-identification code, or ID-and password, which he would need to type in before being allowed access to the computer's files. Even then, to prevent companies from seeing each other's data, users were confined to their own sectors of the computer.

But Bernay quickly tired of this arrangement. He wrote a program that allowed him to read everyone else's ID and password, which he then used to enter the other sectors, and he began leaving messages for users in their files, signing them "The Midnight Skulker." He didn't particularly want to get caught, but he did want to impress others with what he could do; he wanted some sort of reaction. When the computer operators changed the passwords, Bernay quickly found another way to access them. He left clues about his identity in certain files, and even wrote a program that, if activated, would destroy his own password-catching program. He wanted to play, to have his original program destroyed so that he could write another one to undo what he had, in effect, done to himself, and then reappear. But the management refused to play. So he left more clues, all signed by "The Midnight Skulker."

Eventually the management reacted: they interrogated everyone who had access to the mainframe, and inevitably, one of Bernay's colleagues fingered him. Bernay was fired.

When Rosenbaum wrote his article in 1971, the practice of breaking into computers was so new and so bizarre, it didn't even have a name. Rosenbaum called it computer freaking-the f used to distinguish it from ordinary phone phreaking. But what was being described was the birth of hacking.

It was Draper, alias Captain Crunch, who, while serving a jail sentence, unintentionally spread the techniques of phreaking and hacking to the underworld-the real underworld of criminals and drug dealers. Part of the reason Draper went to jail, he now says, was because of the Esquire article: "I knew I was in trouble as soon as I read it." As a direct result of the article, five states set up grand juries to investigate phone phreaking and, incidentally, Captain Crunch's part in it. The authorities also began to monitor Draper's movements and the phones he used. He was first arrested in 1972, about a year after the article appeared, while phreaking a call to Sydney, Australia. Typically, he wasn't actually speaking to anyone; he had called up a number that played a recording of the Australian Top Ten.

Four years later he was convicted and sent to Lompoc Federal Prison in California for two months, which was where the criminal classes first learned the details of his techniques. It was, he says, a matter of life or death. As soon as he was inside, he was asked to cooperate and was badly beaten up when he refused. He realized that in order to survive, he would have to share his knowledge. In jail, he figured, it was too easy to get killed. "It happens all the time. There are just too many members of the 'Five Hundred Club,' guys who spend most of their time pumping iron and lifting five-hundred-pound weights," he says.

So he picked out the top dog, the biggest, meanest, and strongest inmate, as his protector. But in return Draper had to tell what he knew. Every day he gave his protector a tutorial about phreaking: how to set up secure loops, or eavesdrop on other telephone conversations. Every day the information was passed on to people who could put it to use on the outside. Draper remains convinced that the techniques that are still used by drug runners for computer surveillance of federal agents can be traced back to his tutorials.

But criminals were far from the only group to whom Draper's skills appealed. Rosenbaum's 1971 article introduced Americans for the first time to a new high-tech counterculture that had grown up in their midst, a group of technology junkies that epitomized the ethos of the new decade. As the sixties ended, and the seventies began, youth culture-that odd mix of music, fashion, and adolescent posturing-had become hardened and more radical. Woodstock had succumbed to Altamont; Haight-Ashbury to political activism; the Berkeley Free Speech Movement to the Weathermen and the Students for a Democratic Society.

Playing with Ma Bell's phone system was too intriguing to be dismissed as just a simple technological game. It was seen as an attack on corporate America-or "Amerika," as it was often spelt then to suggest an incipient Nazism within the state-and phreaking, a mostly apolitical pastime, was adopted by the radical movement. It was an odd mix, the high-tech junkies alongside the theatrical revolutionaries of the far left, but they were all part of the counterculture.

Draper himself was adopted by the guru of the whole revolutionary movement. Shortly after his arrest, he was contacted by Abbie Hoffman, the cofounder of the Youth International Party Line (YIPL). Hoffman invited Draper to attend the group's 1972 national convention in Miami and offered to organize a campaign fund for his defense.

At the time Hoffman was the best-known political activist in America. An anti-Vietnam war campaigner, a defendant in the Chicago Seven trial, he had floated YIPL in 1971 as the technical offshoot of his radical Yippie party. Hoffman had decided that communications would be an important factor in his revolution and had committed the party to the "liberation" of Ma Bell, inevitably portrayed as a fascist organization whose influence needed to be stemmed.

YIPL produced the first underground phreaker newsletter, initially under its own name. In September 1973 it became TAP, an acronym that stood for Technological Assistance Program. The newsletter provided its readers with information on telephone tapping and phreaking techniques and agitated against the profits being made by Ma Bell.

Draper went to the YIPL convention, at his own expense, but came back empty-handed. It was, according to Draper, "a total waste of time," and the defense fund was never organized. But ironically, while the political posturing of the radicals had little discernible effect on the world, the new dimensions of technology-represented, if imperfectly, by the phreakers-would undeniably engender a revolution.

Before he went to jail, Draper was an habitue of the People's Computer Company (PCC), which met in Menlo Park, California. Started in 1972 with the aim of demystifying computers, it was a highly informal association, with no members as such; the twenty-five or so enthusiasts who gathered at PCC meetings would simply be taught the mysteries of computing, using an old DEC machine. They also hosted pot-luck dinners and Greek dances; it was as much a social club as a computer group.

But there was a new buzz in the air: personal computers, small, compact machines that could be used by anyone. A few of the PCC-ites gathered together to form a new society, one that would "brew" their own home computers, which would be called the Homebrew Computer Club. Thirty-two people turned up for the inaugural meeting of the society on March 5, 1975, held in a garage in Menlo Park.

The club grew exponentially, from sixty members in April to one hundred and fifty in May. The Homebrewers outgrew the Menlo Park garage and, within four months, moved to an auditorium on the Stanford campus. Eventually, Homebrew boasted five or six hundred members. With Haight-Ashbury down the road and Berkeley across the bay, the club members shared the countercultural attitudes of the San Francisco area. The club decried the "commercialization" of computers and espoused the notion of giving computer power to the people.

In those days the now-ubiquitous personal computer was making its first, tentative appearance. Before the early 1970s, computers were massive machines, called mainframes because the electronic equipment had to be mounted on a fixed frame. They were kept in purpose-built, climate-controlled blocks and were operated by punch card or paper tape; access was limited-few knew enough about the machines to make use of them anyway-and their functions were limited. The idea of a small, lightweight computer that was cheap enough to be bought by any member of the public was revolutionary, and it was wholeheartedly endorsed by the technological radicals as their contribution to the counterculture. They assumed that moving computing power away from the government and large corporations and bringing it to the public could only be a good thing.

The birthplace of personal computing is widely believed to be a shop sandwiched between a Laundromat and a massage parlor in a run-down suburban shopping center in Albuquerque, New Mexico. It was there, in the early 1970s, that a small team of self-proclaimed rebels and misfits designed the first personal computer, the Altair 8800, which was supposedly named after one of the brightest stars in the universe. Formally launched in January 1975, it was heralded by Popular Electronics as "the first minicomputer kit to rival commercial models," and it cost $395.

The proclaimed mission of the Altair design team was to liberate technology, to "make computing available to millions of people and do it so fast that the US Stupid Government [sic] couldn't do anything about it." They believed that Congress was about to pass a law requiring operators to have a license before programming a computer. "We figured we had to have several hundred machines in people's hands before this dangerous idea emerged from committee. Otherwise, 1984 would really have been 1984," said David Bunnell, a member of the original design team.

The group looked upon the personal computer, in Bunnell's words, as "just as important to New Age people as the six-shooter was to the original pioneers. It was our six-shooter. A tool to fight back with. The PC gave the little guy a fighting chance when it came to starting a business, organizing a revolution, or just feeling powerful."

In common with other early PCs, the Altair was sold in kit form, limiting its appeal to hobbyists and computer buffs whose enthusiasm for computing would see them through the laborious and difficult process of putting the machines together. Once assembled, the kit actually did very little. It was a piece of hardware; the software-the programs that can make a PC actually do something, such as word processing or accounting-didn't exist. By present-day standards it also looked forbidding, a gray box with a metallic cover housing a multitude of LED lights and switches. The concept of "user-friendliness" had not yet emerged.

The launch of the Altair was the catalyst for the founding of the Homebrew Computer Club. Motivated by the success of the little machine, the members began working on their own designs, using borrowed parts and operating systems cadged from other computers. Two members of the club, however, were well ahead of the others. Inspired by Rosenbaum's article in Esquire, these two young men had decided to build their own blue boxes and sell them around the neighboring Stanford and Berkeley campuses. Though Rosenbaum had deliberately left out much of the technical detail, including the multifrequency tone cycles, the pair scratched together the missing data from local research libraries and were able to start manufacturing blue boxes in sizeable quantities. To keep their identities secret, they adopted aliases: Steve Jobs, the effusive, glib salesman of the two, became Berkeley Blue; Steve Wozniak, or Woz, the consummate technician, became-as far as he can remember-Oak Toebark. The company they founded in Jobs's parents' garage was to become Apple Computer.

The duo's primitive blue-box factory began to manufacture MF-ers on nearly an assembly-line basis. Jobs, whose sales ability was apparent even then, managed to find buyers who would purchase up to ten at a time. In interviews given since, they estimated that they probably sold a couple hundred of the devices. Under California law at the time, selling blue boxes was perfectly legal, although using them was an offense. They got close to getting caught only once, when they were approached by the highway patrol while using one of their own blue boxes at a telephone booth. They weren't arrested-but only because the patrolman didn't recognize the strange device they had with them.

The two Steves had grown up in the area around Los Altos, part of that stretch of Santa Clara County between San Francisco and San Jose that would later become known as Silicon Valley. They had both been brought up surrounded by the ideas and technology that were to transform the area: Wozniak's father was an electronics engineer at Lockheed Missiles and Space Company and helped his son learn to design logic circuits. When the two boys first met, Jobs was particularly impressed that Wozniak had already built a computer that had won the top prize at the Bay Area science fair.

It has been said that Jobs and Wozniak were the perfect team, and that without Jobs, the entrepreneur, Woz would never have outgrown Homebrew. Wozniak was, at heart, a hacker and a phreaker; at the club he liked to swap stories with Draper, and he once tried to phreak a call to the pope by pretending to be Henry Kissinger. Before a Vatican official caught on, he had almost succeeded in getting through. Jobs, on the other hand, was first and foremost a businessman. He needed Wozniak to design the products-the blue boxes, the computers-for him to sell.

The Apple computer happened almost by accident. Had he had enough money, Woz would have been happy to go out and buy a model from one of the established manufacturers. But he was broke, so he sat down and began designing his own homemade model.

He had set out to build something comparable to the desktop computer he used at Hewlett-Packard, where he worked at the time. That computer was called the 9830 and sold for $10,000 a unit. Its biggest advantage was that it used BASIC, a computer language that closely resembles normal English. BASIC alleviated a lot of complications: a user could sit down, turn on the machine, and begin typing, which wasn't always possible with other computer languages.

BASIC-an acronym for Beginner's All-purpose Symbolic Instruction Code-had already been adapted by software pioneers Bill Gates and Paul Allen for use on the Altair. (Gates-soon to become America's youngest billionaire-and Allen went on to found Microsoft, probably the world's most powerful software company.) The language was compact, in that it required very little computer memory to run, an essential requirement for microcomputers. Woz began work on his new computer by adapting BASIC to run with a microprocessor-a sort of mini computer brain, invented earlier in the decade, which packed all the functions of the central processing unit (CPU) of a large computer onto a tiny semiconductor chip. The invention allowed the manufacture of smaller computers, but attracted little attention from traditional computer companies, who foresaw no market at all for PCs. All the action in those days was with mainframes.

Woz's prototype was first demonstrated to the self-proclaimed radicals at the Homebrew Club, who liked it enough to place a few orders. Even more encouraging, the local computer store, the Byte Shop, placed a single order for $50,000 worth of the kits.

The Byte Shop was one of the first retail computer stores in the world, and its manager knew that a fully assembled, inexpensive home computer would sell very well. The idea was suggested to Jobs, who began looking for the financial backing necessary to turn the garage assembly operation he and Woz now ran into a real manufacturing concern.

How the two Steves raised the money for Apple has been told before. Traditional manufacturers turned them down, and venture capitalists had difficulty seeing beyond appearance and philosophy. It was a clash of cultures. Jobs and Woz didn't look like serious computer manufacturers; with their long hair and standard uniform of sandals and jeans, they looked like student radicals. One venture capitalist, sent out to meet Jobs at the garage, described him as an unusual business prospect, but eventually they did find a backer.

The first public showing of what was called the Apple II was at the West Coast Computer Fair in San Francisco in April 1977. The tiny company's dozen or so employees had worked through the night to prepare the five functioning models that were to be demonstrated. They were sleek little computers: fully assembled, light, wrapped in smart gray cases with the six-color Apple logo discreetly positioned over the keyboard. What would set them apart in particular, though, was their floppy-disk capability, which became available on the machines in 1979.

The floppy disk-or diskette-is a data-storage system developed for larger computers. The diskette itself is a thin piece of plastic, protected by a card cover, that looks a little like a 45-rpm record, and is used to load programs or to store data. Prior to the launch of the Apple II, all microcomputers used cassette tapes and ordinary cassette recorders for data storage, a time-consuming and inefficient process. The inclusion of the floppy-disk system gave the Apple II a competitive edge: users would no longer need to fiddle about with tapes and recorders, and the use of diskettes, as well as the simple operating system that Woz had built into the computer, encouraged other companies to write software for the new machines.

This last development more than anything else boosted the Apple II out of the hobbyist ghetto. The new Apple spawned a plethora of software: word-processing packages, graphics and arts programs, accounting systems, and computer games. The launch two years later of the VisiCalc spreadsheet, a business forecasting program, made the Apple particularly attractive to corporate users.

Even Captain Crunch wrote software for the Apple II. At the time, in 1979, he was incarcerated in Northampton State Prison in Pennsylvania for a second phreaking offense. While on a rehabilitation course that allowed him access to a computer, he developed a program called EasyWriter, one of the first word-processing packages, which for a short time became the second-best-selling program in America. Draper went on to write other applications, marketed under the "Captain Software" label.

The Apple II filled a niche in the market, one that traditional computer manufacturers hadn't realized was there. The Apple was small and light, it was easy to use and could perform useful functions. A new purchaser could go home, take the components out of their boxes, plug them in, load the software, then sit down and write a book, plot a company's cash flow, or play a game.

By any standards Apple's subsequent growth was phenomenal. In its first year of operation, 1977, it sold $2.5 million worth of computers. The next year sales grew to $15 million, then in 1979 to $70 million. In 1980 the company broke through the $100 million mark, with sales of $117 million. The figures continued to rise, bounding to $335 million in 1981 and $583 million in 1982. Along the way the founders of Apple became millionaires, and in 1980, when the company went public, Jobs became worth $165 million and Wozniak $88 million.

The story of Apple, though, isn't just the story of two young men who made an enviable amount of money. What Jobs and Wozniak began with their invention was a revolution. Bigger than Berkeley's Free Speech Movement and "the summer of love" in Haight-Ashbury, the technological revolution represented by the personal computer has brought a real change to society. It gave people access to data, programs, and computing power they had never had before. In an early promotional video for Apple, an earnest employee says, "We build a device that gives people the same power over information that large corporations and the government have over people."

The statement deliberately echoes the "power to the people" anthem of the sixties, but while much of the political radicals' time was spent merely posturing, the technological revolutionaries were delivering a product that brought the power of information to the masses. That the technological pioneers became rich and that the funky little companies they founded turned into massive corporations is perhaps testament to capitalism's capacity to direct change, or to coopt a revolution.

Apple was joined in the PC market by hundreds of other companies, including "Big Blue" itself-IBM. When the giant computer manufacturer launched its own PC in 1981, it expected to sell 250,000 units over five years. Again, the popular hunger for computing power was underestimated. In a short while, IBM was selling 250,000 units a month. Penetration of personal computers has now reached between 15 and 35 percent of all homes in the major industrialized countries. There are said to be 50 to 90 million PCs in use in homes and offices throughout the world, and the number is still rising.

And though the PC revolution would probably have happened without Wozniak and Jobs, it might not have happened as quickly. It's worth remembering that the catalyst for all this was a magazine article about phreaking.

Computers are more than just boxes that sit on desks. Within the machines and the programs that run them is a sort of mathematical precision that is breathtaking in the simplicity of its basic premise. Computers work, essentially, by routing commands, represented by electrical impulses, through a series of gates that can only be open or closed-nothing else. Open or closed; on or off. The two functions are represented symbolically as 1 (open/ on) or 0 (closed/off). The route the pulse takes through the gates determines the function. It is technology at its purest: utter simplicity generating infinite complexity.

The revolution that occurred was over the control of the power represented by this mathematical precision. And the argument is still going on, although it is now concerned not with the control of computers but with the control of information. Computers need not be isolated: with a modem-the boxlike machine that converts computer commands to tones that can be carried over the phone lines-they can be hooked up to vast networks of mainframe computers run by industry, government, universities, and research centers. These networks, all linked by telephone lines, form a part of a cohesive international web that has been nicknamed Worldnet. Worldnet is not a real organization: it is the name given to the international agglomeration of computers, workstations, and networks, a mix sometimes called information technology. Access to Worldnet is limited to those who work for the appropriate organizations, who have the correct passwords, and who are cleared to receive the material available on the network.

For quite obvious reasons, the companies and organizations that control the data on these networks want to restrict access, to limit the number of people wandering through their systems and rifling through their electronic filing cabinets. But there is a counterargument: the power of information, the idealists say, should be made available to as many people as possible, and the revolution wrought by PCs won't be complete until the data and research available on computer networks can be accessed by all.

This argument has become the philosophical justification for hacking-although in practice, hacking usually operates on a much more mundane level. Hacking, like phreaking, is inspired by simple curiosity about what makes the system tick. But hackers are often much more interested in accessing a computer just to see if it can be done than in actually reading the information they might find, just as phreakers became more interested in the phone company than in making free calls. The curiosity that impelled phreakers is the same one that fuels hackers; the two groups merged neatly into one high-tech subculture.

Hacking, these days, means the unauthorized access of computers or computer systems. Back in the sixties it meant writing the best, fastest, and cleverest computer programs. The original hackers were a bunch of technological wizards at MIT, all considered among the brightest in their field, who worked together writing programs for the new computer systems then being developed. Their habits were eccentric: they often worked all night or for thirty-six hours straight, then disappeared for two days. Dress codes and ordinary standards were overlooked: they were a disheveled, anarchic bunch. But they were there to push back the frontiers of computing, to explore areas of the new technology that no one had seen before, to test the limits of computer science.

In the early eighties, the computer underground, like the computer industry itself, was centered in the United States. But technology flows quickly across boundaries, as do fads and trends, and the ethos of the technological counterculture became another slice of Americana that, like Hollywood movies and Coca-Cola, was embraced internationally.

Although the United States nurtured the computer underground, the conditions that spawned it existed in other countries as well. There were plenty of young men all over the world who would become obsessed with PC technology and the vistas it offered, and many who would be attracted to the new society, with its special jargon and rituals. The renegade spirit that created the computer underground in the first place exists worldwide.

In 1984, the British branch of the technological counterculture probably began with a small group that used to meet on an ad hoc basis in a Chinese restaurant in North London. The group had a floating membership, but usually numbered about a dozen; its meetings were an excuse to eat and drink, and to exchange hacker lore and gossip.

Steve Gold, then a junior accountant with the Regional Health Authority in Sheffield and a part-time computer journalist, was twenty-five, and as one of the oldest of the group, had been active when phone phreaking first came to England. Gold liked to tell stories about Captain Crunch, the legendary emissary from America who had carried the fad across the Atlantic.